Welcome to AutoTransformers

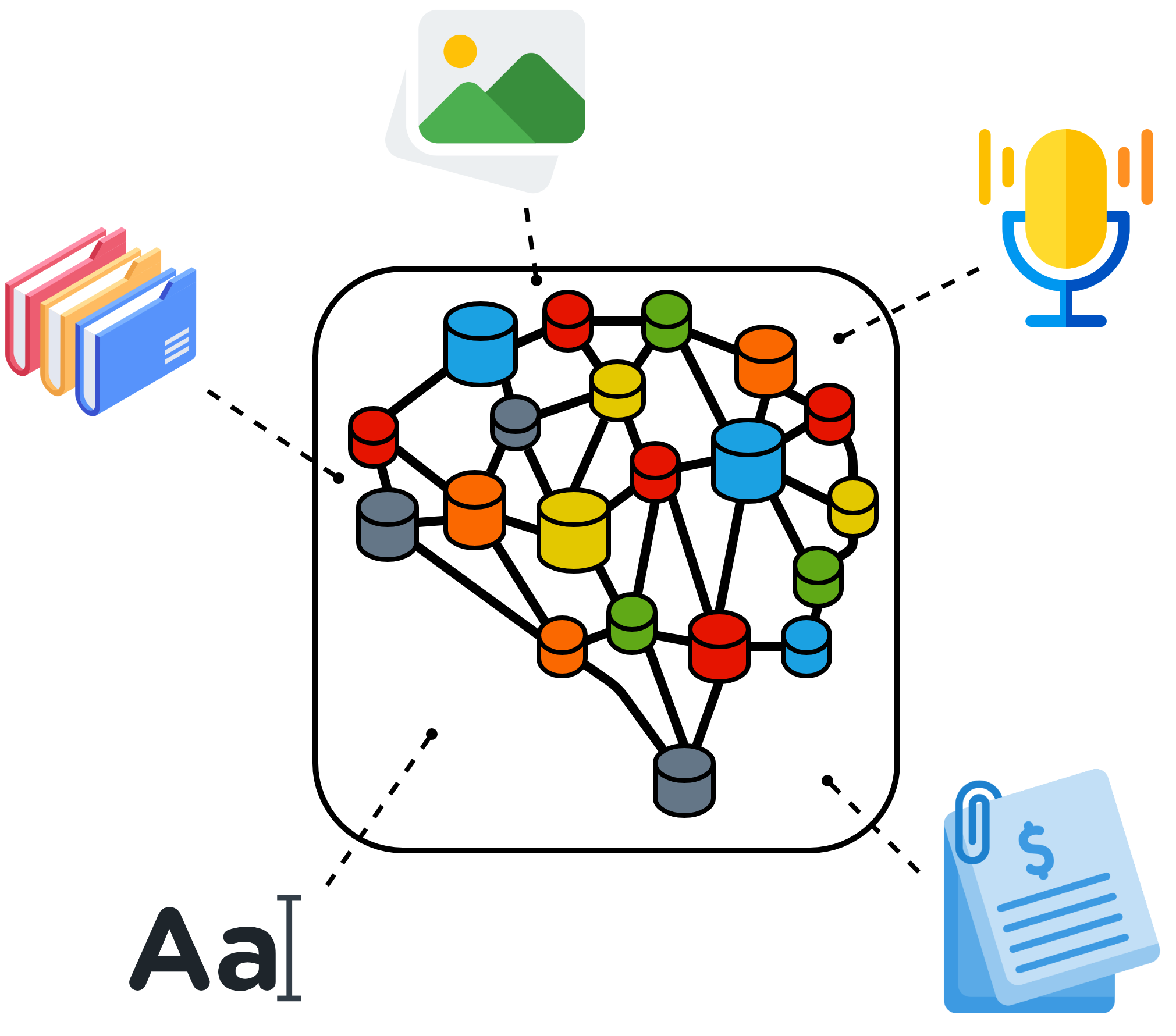

Transform data into high-performance machine learning models using only a few lines of code. No machine-learning expertise required.

The AutoTransformers library automatically transforms data into ML models that can later be used to predict new data and to automate tasks such as text classification and document information extraction. The library supports many different domains, such as text, documents and images, and creates high-performance models even from a small amount of training data. Our research department implements and evaluates cutting-edge techniques on our internal DeepOpinion Benchmarks, which include over 40 real-world datasets, to ensure that the AutoTransformers library delivers the highest possible performance. Additionally, only a few lines of code are needed to use the library, and both training and prediction can run completely on-premise, so your data is never shared with any third-party provider.

Note

The AutoTransformers library is also the backbone of our no-code ML solution DeepOpinion Studio (https://studio.deepopinion.ai/) which can be used to create high-performance models without writing a single line of code.

Key Features

Here we list some of the core features of the AutoTransformers library:

Automatically creates high-performance machine learning models

The `at wizard` generates the training script, the prediction script, a dataset template and a README for you to further reduce development time

High performance even with little labeling effort, through features such as active learning

One API for many domains such as text, documents or images

No ML expertise and only a few lines of code are required

100% on-premise execution

Supports predicting multiple tasks in parallel (e.g. classification and information extraction), depending on your data and requirements

Open ecosystem and third-party integrations (e.g. WandB, ClearML)

Out-of-the-box distributed execution on multiple GPUs

Automatic checkpointing to stop and continue training at any time

Fully flexible configuration for high customizability, custom implementations, etc.

Getting Started

Tutorials

- Simple Training

- Multilabel text classification

- Multi-Single label text classification

- Document Information Extraction (DIE)

- Multitask Models

- Configuration Options

- Saving, loading and checkpointing models

- Progress tracking

- Active Learning

- Text Information Extraction (TIE)

- Document Classification (DC)

Developer API